Building Competence and Assessing Performance

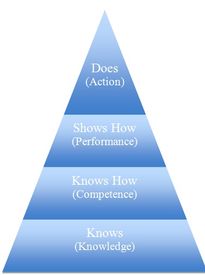

From medical schools to customer service environments to corporate leadership, most educators understand that professional knowledge, while a necessary foundation, is not enough to ensure professional effectiveness. In any profession, knowledge is only the beginning of the process, as illustrated in Figure 1, adapted from George E. Miller, MD.

Using acquired knowledge ‘on the job’ requires opportunities to practice integrating that knowledge in realistic but low-stakes settings.

Research and experience also tell us that excellent communication and interpersonal skills are equally important to professional success. Effective provider-patient communication has been linked to improved patient adherence and clinical outcomes, and research suggests a link between poor interpersonal skills and an increased risk of malpractice suits.

The most efficient and cost-effective means of ensuring the integration of knowledge and professional practice is through experiential learning and assessment. Encounters with standardized professionals provide repeated, focused opportunities for practice, followed by clear, caring, and constructive feedback. Equally important, standardized patient (SP) programs provide reliable, valid assessment of trainees that not only highlights deficiencies in knowledge, competence, and performance but can also point to gaps in the curriculum.

The SP Program at Penn Med is a leader in providing high-level programs that offer standardized encounters to help trainees progress as they combine knowledge and performance, with the ultimate goal of effective action.

From the Association of Standardized Patient Educators (ASPE) Newsletter

How Do We Know SPs Do a Better Job Evaluating Learners Than Non-SPs?

SP educators are proud of their training methods and of their ability to produce SPs who can be relied upon to rate learners effectively during events, including high-stakes OSCEs. At times, we need to reinforce the point that trained SPs do a great job when we are given the resources to train them properly.

In this study, Drs. David Dickter, Sorrel Stielstra, and Matthew Lineberry evaluated whether SPs rate learner performance as well as trained non-SPs. They found that SP “ratings were significantly more reliable than non-SPs’,” even though both groups had received equivalent training. This is more fuel for our fire, though we all wish our SPs were not so routinely referred to as “actors” in such a wide variety of media, including scholarly publications.

Full citation: Dickter DN, Stielstra S, Lineberry M. Interrater reliability of standardized actors versus nonactors in a simulation based assessment of interprofessional collaboration. Simul Healthc. 2015;10(4):249-55. PMID: 26098494.