Research Projects

AI-4-AI bridges medicine, biomedical informatics, engineering, and social science to design and test innovative, real-world solutions for ambulatory and personal healthcare. We focus on using digital data and platforms to improve how care is delivered and experienced.

Project Themes:

- Building data resources: Creating large, privacy-compliant repositories of multimodal clinical data to support diverse research communities.

- Advancing privacy methods: Developing cutting-edge approaches to de-identify clinical video and audio so these resources can be shared safely across institutions.

- Fostering collaboration: Hosting multidisciplinary forums where clinicians, researchers, and technologists critically examine clinical encounters and health records to inspire improvements in workflows and care delivery.

Current Projects

Team: Sriharsha Mopidevi, Matthew Hill, Basam Alasaly, Rachel Wu

The Observer Repository compiles multimodal data—including videos, audio, annotations, and surveys—collected from clinical visits across diverse healthcare settings, such as Penn Medicine departments and the Penn Medicine Clinical Simulation Center. By providing privacy-compliant access to previously hard-to-obtain clinical data, the repository supports both medical and non-medical researchers in advancing healthcare studies and innovation.

Team: Sriharsha Mopidevi

MedVidDeID is a six-stage, modular, and scalable pipeline designed to de-identify raw audio and video recordings of clinical visits by removing personal health information (PHI). Combining natural language processing (NLP), computer vision, and other algorithmic techniques, the pipeline removes PHI from transcripts, audio, and video (including faces and/or explicit body parts). As a result, the data can be securely accessed and used for research by investigators across institutions.
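The six stages are not detailed here. As a minimal sketch, assuming an upstream NLP tagger has already produced a word-level transcript with timestamps and PHI flags (the `Word` type and `redact` function are hypothetical, not MedVidDeID's actual interface), the step that links transcript redaction to audio redaction might look like this:

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One transcribed word with timing in seconds (hypothetical ASR output format)."""
    text: str
    start: float
    end: float
    is_phi: bool  # assumed to be set upstream by an NLP PHI tagger


def redact(words: list[Word], tag: str = "[PHI]", pad: float = 0.1):
    """Mask PHI words in the transcript and return audio intervals to silence.

    Assumes `words` is sorted by start time. Each PHI word's interval is padded
    by `pad` seconds, and overlapping or touching intervals are merged so the
    audio stage mutes one continuous span instead of many short fragments.
    """
    transcript = " ".join(tag if w.is_phi else w.text for w in words)

    intervals: list[tuple[float, float]] = []
    for w in words:
        if not w.is_phi:
            continue
        start, end = max(0.0, w.start - pad), w.end + pad
        if intervals and start <= intervals[-1][1]:
            # Overlaps the previous muted span: extend it rather than add a new one.
            intervals[-1] = (intervals[-1][0], max(intervals[-1][1], end))
        else:
            intervals.append((start, end))
    return transcript, intervals
```

Merging padded intervals is an illustrative design choice: it avoids leaving short audible gaps between consecutive PHI words, such as a title followed by a surname.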

Team: Sydney Pugh, Matthew Hill, Sy Hwang, Rachel Wu

Addressing the significant underdiagnosis of Alzheimer's disease, the WATCH study aims to identify key diagnostic clues for assessing cognitive impairment risk. In collaboration with board-certified neurologists, this project harnesses large language models and multimodal systems to analyze patients' linguistic patterns, visual cues, and electronic health records (EHR). Ultimately, the project seeks to develop an AI-driven tool capable of real-time detection from clinical visits or natural speech, offering personalized follow-up recommendations based on assessment results. By enabling earlier diagnosis and intervention, the WATCH study has the potential to improve patient outcomes and alleviate the burden of undetected cognitive decline.

Current Team: Sameer Bhatti, Amisha Jain

Past Contributors: Kuk Jin Jang, Chimezie Maduno, Alexander Budko

The ACAM project aims to enhance clinical interactions by facilitating real-time agenda setting between clinicians and patients. It tracks the topics discussed during a visit to reduce the chance that topics go unaddressed or that unexpected topics are brought up. By leveraging large language models (LLMs), ACAM aims to improve the efficiency and effectiveness of patient-provider interactions, helping ensure that critical issues are addressed in every conversation.
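As a rough illustration of the bookkeeping behind agenda tracking, the sketch below records which agenda items have come up as utterances arrive. It assumes the agenda is known at the start of the visit, and it uses simple keyword matching as a hypothetical stand-in for the LLM-based topic detection described above. `AgendaTracker` and the example keywords are illustrative names only.

```python
import re
from dataclasses import dataclass, field


@dataclass
class AgendaTracker:
    """Track which agenda items have been discussed during a visit.

    Keyword matching is a placeholder for LLM-based topic detection;
    item names and keywords are hypothetical.
    """
    items: dict[str, set[str]]  # agenda item -> trigger keywords
    addressed: set[str] = field(default_factory=set)

    def observe(self, utterance: str) -> list[str]:
        """Mark items matched by this utterance; return only newly addressed ones."""
        words = set(re.findall(r"[a-z]+", utterance.lower()))
        hits = [item for item, keys in self.items.items()
                if item not in self.addressed and words & keys]
        self.addressed.update(hits)
        return hits

    def outstanding(self) -> list[str]:
        """Agenda items not yet discussed, in their original order."""
        return [item for item in self.items if item not in self.addressed]
```

Near the end of a visit, `outstanding()` is the list a real-time prompt could surface so that remaining concerns are raised before the patient leaves.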

Team: Matthew Hill, Basam Alasaly, Sameer Bhatti, Kevin Johnson

Ambient scribing technology transcribes clinical encounters in real time, aiming to streamline documentation tasks and alleviate provider workload. This set of projects evaluates ambient scribes' effects on documentation efficiency and patient-centered care by:

- Examining how ambient scribes influence provider-patient communication, time allocation during visits, electronic health record engagement, and satisfaction metrics.

- Exploring methods to accurately extract medical concepts from clinical notes using natural language processing and large language model pipelines.

- Investigating content quality and detail in ambient-scribe-produced documentation, focusing on note bloat, standardized quality metrics, and clinical value.
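The NLP and LLM extraction pipelines themselves are not described here. For orientation only, the sketch below shows the kind of simple dictionary-lookup baseline that such pipelines can be compared against: it finds known phrases in a note and maps them to normalized concepts, preferring the longest match when phrases overlap. The lexicon and labels are hypothetical toy values, not a real terminology.

```python
import re

# Hypothetical toy lexicon: surface phrase -> normalized concept label.
LEXICON = {
    "high blood pressure": "hypertension",
    "hypertension": "hypertension",
    "blood pressure": "blood pressure",
    "shortness of breath": "dyspnea",
    "metformin": "metformin",
}


def extract_concepts(note: str, lexicon: dict[str, str] = LEXICON) -> list[tuple[str, str]]:
    """Return (matched phrase, concept) pairs in order of appearance.

    Longer phrases are matched first, and their character positions are
    claimed so that a shorter overlapping phrase is not also reported.
    """
    text = note.lower()
    taken = [False] * len(text)
    found = []
    for phrase in sorted(lexicon, key=len, reverse=True):
        for m in re.finditer(r"\b" + re.escape(phrase) + r"\b", text):
            if not any(taken[m.start():m.end()]):
                found.append((m.start(), phrase, lexicon[phrase]))
                for i in range(m.start(), m.end()):
                    taken[i] = True
    return [(phrase, concept) for _, phrase, concept in sorted(found)]
```

A lookup like this misses paraphrases, negation ("denies shortness of breath"), and abbreviations. Those gaps are exactly what motivate NLP and LLM-based extraction.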

Current Team: Andrew Zolensky, Basam Alasaly, Sriharsha Mopidevi

Past Contributors: Kuk Jin Jang

This project develops a model that assigns speaker roles to utterances in real-time, inpatient clinical settings to improve live captioning and documentation. Using decision trees and fine-tuned large language models (LLMs), it explores how effectively diarization (segmenting and grouping speech by speaker), combined with semantic cues in what is said, can be used to infer speaker roles. By integrating transcription, segmentation, and role classification, the system supports live captioning, real-time decision support, and automatic documentation. This work aims to improve communication for hearing-impaired patients, streamline clinical workflows, and advance intelligent healthcare assistance.
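One way to combine diarization with per-utterance role classification is to let each diarized speaker take the role most often predicted for their utterances. The sketch below assumes this majority-vote rule and uses a keyword-based `classify_utterance` function. Both are hypothetical stand-ins for the decision-tree and LLM classifiers described above, not the project's actual method.

```python
from collections import Counter, defaultdict


def classify_utterance(text: str) -> str:
    """Hypothetical placeholder for a trained role classifier (decision tree or LLM)."""
    t = text.lower()
    if any(cue in t for cue in ("prescribe", "your labs", "i recommend")):
        return "clinician"
    if any(cue in t for cue in ("it hurts", "i feel", "my pain")):
        return "patient"
    return "unknown"


def assign_roles(segments: list[tuple[str, str]]) -> dict[str, str]:
    """Map each diarized speaker label to a role by majority vote.

    `segments` holds (speaker_label, utterance_text) pairs from diarization.
    Utterances the classifier cannot label are left out of the vote.
    """
    votes: dict[str, Counter] = defaultdict(Counter)
    for speaker, text in segments:
        role = classify_utterance(text)
        if role != "unknown":
            votes[speaker][role] += 1

    roles: dict[str, str] = {}
    for speaker, _ in segments:
        if speaker not in roles:
            counts = votes.get(speaker)  # .get does not create an empty Counter
            roles[speaker] = counts.most_common(1)[0][0] if counts else "unknown"
    return roles
```

Voting at the speaker level smooths over individual misclassified utterances. The trade-off is that any diarization error, such as two people merged into one speaker label, carries over directly into the role assignment.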

Team: Jean Park

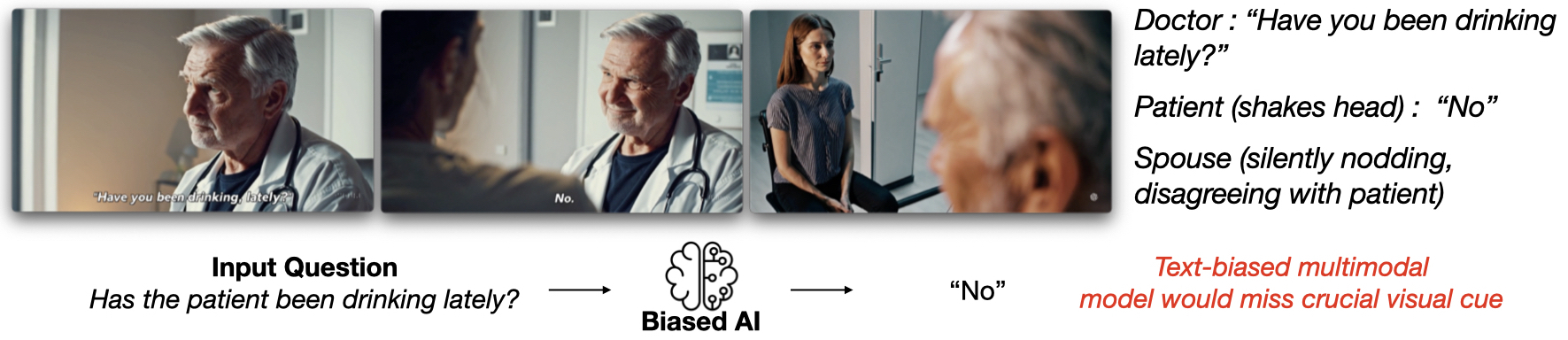

This study analyzes multimodal integration in Video Question Answering (VidQA) datasets using a novel Modality Importance Score derived from Multimodal Large Language Models (MLLMs). Our critical analysis of popular VidQA benchmarks reveals an unexpected prevalence of questions biased toward a single modality, lacking the complexity that would require genuine multimodal integration. This exposes limitations in current dataset designs and suggests that many existing questions may not effectively test models' ability to integrate cross-modal information. Our findings can guide the creation of more balanced datasets and better assessment of models' multimodal reasoning capabilities, advancing the field of multimodal AI.
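The Modality Importance Score is defined in the study itself and is not reproduced here. To illustrate the broader idea of measuring how much a question depends on each modality, the sketch below uses a simpler leave-one-out ablation instead. `score` is a hypothetical callable that returns a model's probability of the correct answer for a given subset of modalities. The usage values are illustrative, not results.

```python
from typing import Callable, FrozenSet

# Hypothetical scorer: modality subset -> model's probability of the correct answer.
Scorer = Callable[[FrozenSet[str]], float]


def ablation_importance(score: Scorer, modalities: FrozenSet[str]) -> dict[str, float]:
    """Drop in correct-answer score when each modality is removed (leave-one-out)."""
    full = score(modalities)
    return {m: full - score(modalities - {m}) for m in sorted(modalities)}


def single_modality_sufficient(score: Scorer, modalities: FrozenSet[str],
                               tol: float = 0.05) -> list[str]:
    """Modalities that alone come within `tol` of the all-modality score.

    A non-empty result suggests the question may not require cross-modal integration.
    """
    full = score(modalities)
    return [m for m in sorted(modalities) if score(frozenset({m})) >= full - tol]
```

For example, if a question scores 0.9 with video and subtitles, 0.88 with subtitles alone, and 0.3 with video alone, `single_modality_sufficient` returns `["subtitles"]`. That flags the question as answerable from text alone.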

Team: Amogh Ananda Rao, Patricia Chen, Sydney Pugh

PCP-Bot is a conversational AI tool designed to streamline pre-visit planning in primary care. Guiding patients through natural dialogue, it captures their health histories and converts them into structured summaries for seamless EHR integration. PCP-Bot is intended to replace traditional questionnaires and enhance clinical workflows while maintaining an empathetic patient experience. Once development is complete, we plan to validate PCP-Bot in clinical settings, expand its use across different medical environments, and refine its conversation strategies to improve provider-patient interactions.
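PCP-Bot's actual summary format is not specified here. As a minimal sketch of what a "structured summary" might look like, the hypothetical `PreVisitSummary` schema below gathers the information collected during the conversation. It also reports which sections are still empty, which is one way a dialogue manager could decide what to ask next. All field names are illustrative assumptions.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PreVisitSummary:
    """Hypothetical structured output of a pre-visit conversation.

    Field names are illustrative. A real EHR integration would map them
    onto the target system's own data model.
    """
    chief_concern: str = ""
    symptoms: list[str] = field(default_factory=list)
    medications: list[str] = field(default_factory=list)
    allergies: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Sections still empty, i.e. topics the bot has not yet covered."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the summary for downstream systems."""
        return json.dumps(asdict(self), indent=2)
```

In this sketch an empty list means "not yet asked". A production schema would need to distinguish that state from "patient reports none", for example for allergies.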

Past Projects

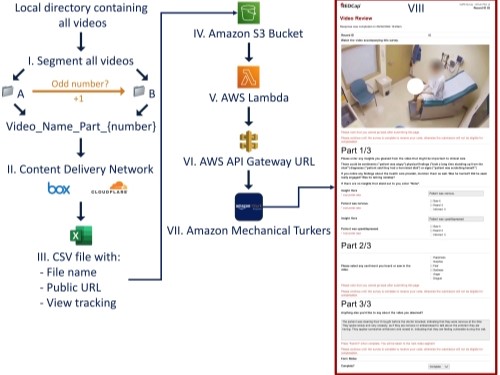

Team: Kuk Jang, Basam Alasaly

CLIPS uses crowdsourcing to identify opportunities to improve ambulatory care with AI and other advanced technologies, and to gather broadly interesting observations from participants who watch snippets of clinic visits. The resulting data provide opportunities for computational research and generate metadata about the relative roles of audio and video in identifying clinical insights.

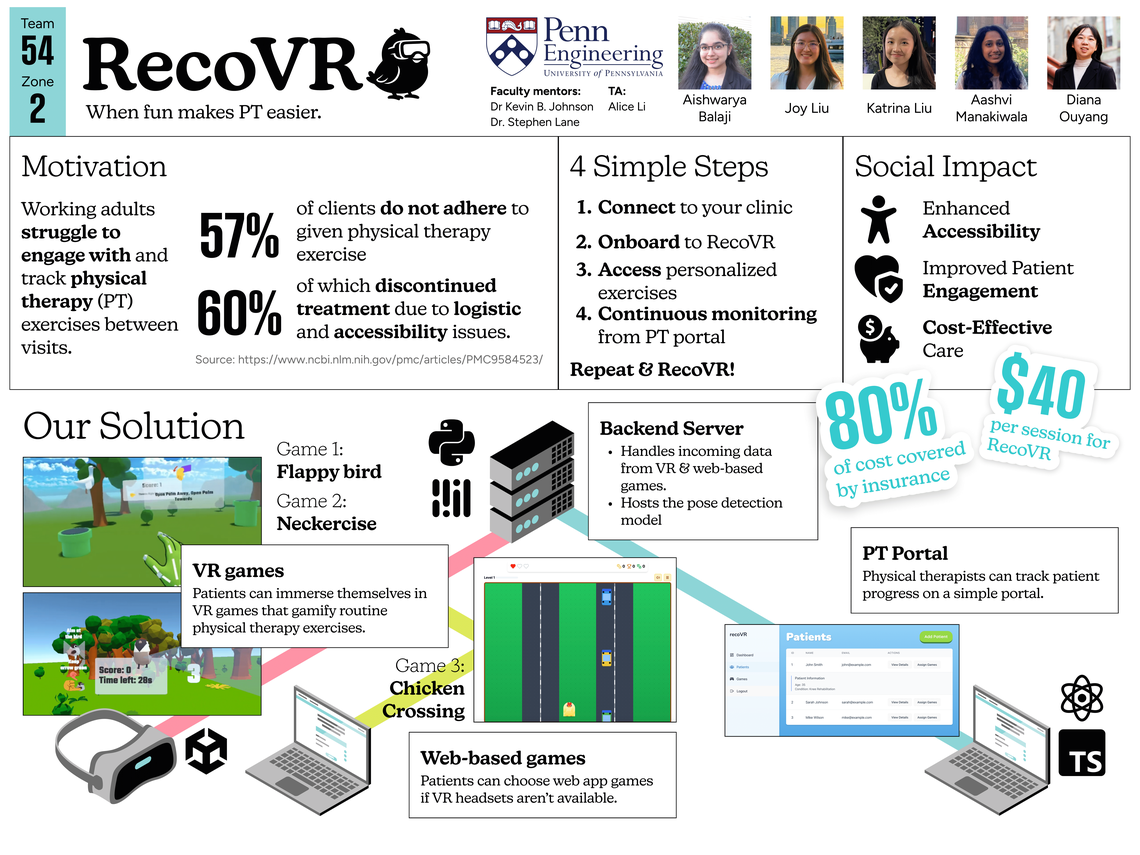

Penn Engineering Undergraduate Senior Design Project

Current Team: Yanbo Feng, Kuk Jang

This project leverages body movement analysis of de-identified video datasets, such as Dem@Care, to predict dementia. Building on well-established research linking gait to dementia, which has traditionally focused on lower-body movement, this work expands the scope to full-body motion. Using advanced computer vision methods to measure patients' motion and posture, the project aims to enhance early detection and deepen our understanding of dementia-related motor changes.
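The specific computer vision methods are not named here. As a minimal sketch, assuming 2D pose keypoints have already been estimated for every video frame, one simple way to extend gait-style lower-body measures to the full body is to compare average joint speeds across body regions. The joint indices below are hypothetical, because real index layouts depend on the pose estimator.

```python
import numpy as np

# Hypothetical joint groupings; real indices depend on the pose estimator's keypoint layout.
UPPER_BODY = [0, 1, 2, 3]
LOWER_BODY = [4, 5, 6, 7]


def region_speeds(keypoints: np.ndarray, fps: float) -> dict[str, float]:
    """Mean joint speed (pixels per second) for upper- and lower-body joint groups.

    keypoints: array of shape (frames, joints, 2) holding x, y positions per frame.
    """
    velocity = np.diff(keypoints, axis=0) * fps   # (frames - 1, joints, 2)
    speed = np.linalg.norm(velocity, axis=-1)     # (frames - 1, joints)
    return {
        "upper": float(speed[:, UPPER_BODY].mean()),
        "lower": float(speed[:, LOWER_BODY].mean()),
    }
```

Pixel-based speeds depend on camera distance and resolution, so in practice features like these would likely need normalization, for example by body height, before they are compared across patients.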

Thank you to our funders