Cognition Test Battery

- Development and Validation of the Cognition Test Battery

- Cognition Battery Description

- Cognition Links to Cerebral Networks Have Been Established

- Links Between Cognition and Operational Performance

- Administering Cognition

- Cognition in Spaceflight

- Availability of the Cognition Software

- Cognition Literature

Development and Validation of the Cognition Test Battery

Cognition is a battery of 10 brief cognitive tests that was developed for the National Aeronautics and Space Administration (NASA). It was constructed by Mathias Basner, David Dinges, Ruben Gur and their research teams in the Department of Psychiatry at the University of Pennsylvania Perelman School of Medicine, and programmed in collaboration with Pulsar Informatics Inc.

Cognition was delivered to NASA and passed the Operations Readiness Review in 2017. Cognition is part of NASA’s “Standard Measures.” The main goal of “Standard Measures” is to ensure that a minimal set of measures is consistently captured from all ISS crewmembers until the end of ISS to characterize the effects of space. The Cognition development team received the 2018 Innovation Award of the American Astronautical Society for the “Development of Neurocognitive Assessment Battery for Use on the ISS.”

Because even small cognitive performance errors may translate to more serious operational performance events in spaceflight, continuously high levels of cognitive performance are a prerequisite for successful space missions. At the same time, astronauts are exposed to a number of stressors (alone and in combination) related to living in an isolated, confined and extreme environment, some of them unique to the spaceflight environment (e.g., microgravity, radiation). Because conditions of spaceflight can at times result in diminished performance capability without astronaut awareness, it is important to objectively assess cognitive performance capability in spaceflight.

Perhaps the most dangerous symptom is impairment to cognitive function – we have to be able to perform tasks that require a high degree of concentration and attention to detail at a moment’s notice, and in an emergency, which can happen anytime, we need to be able to do those tasks right at the first time. Losing just a fraction of our ability to focus, make calculations, or solve problems could cost our lives.

[Scott Kelly: Endurance – A Year in Space, a Lifetime of Discovery, Alfred A. Knopf, New York, 2017, Hardcover, pp. 86-87.]

For over 20 years, NASA has relied on the WinSCAT battery, which contains a subset of 6 Automated Neuropsychological Assessment Metrics (ANAM) tests, to assess astronaut cognitive performance. While these tests are well validated, they have two potential disadvantages: (1) the ANAM tests selected for WinSCAT assess a relatively narrow spectrum of cognitive domains, heavily focusing on working memory; and (2) several tests are too easy for the high-performing astronaut population, introducing ceiling effects and potentially reducing astronaut motivation.

In this light, we were funded by NASA through the National Space Biomedical Research Institute (NSBRI) to develop a new cognitive test battery with the following properties:

- Assess a broad range of cognitive domains

Cognition should assess a range of cognitive domains that go beyond what is currently measured by WinSCAT. We chose 10 performance assessments that probe different cognitive functions highly relevant for the conditions of spaceflight (e.g., spatial orientation, emotion recognition, risk decision making). These tests were chosen to represent largely orthogonal concepts (see below for a description of each test).

- Reflect the high aptitude and motivation of astronauts

The tests should be tailored towards high-performing astronauts to avoid boredom and ceiling effects. This is reflected in the choice of both tests and stimulus sets. For example, we chose to use a fractal 2-Back instead of the letter 1-Back of the WinSCAT battery. We also deliberately chose more difficult stimuli (e.g., for the Emotion Recognition test). We used crowdsourcing to determine the properties of individual stimuli and stimulus sets.

- Allow for repeated administration

On a mission to Mars, astronauts will need to be monitored on a regular basis for any changes in cognitive functions. Cognition therefore needed to facilitate repeated administrations. To address this need we generated 15 unique versions of Cognition. Six out of the 10 tests have unique stimulus sets. The other four tests randomly generate stimuli during test administration.

- Use well-validated tests as the basis for Cognition

Eight out of the 10 Cognition tests are based on the Computerized Neurocognitive Battery (CNB) developed by Dr. Ruben Gur at the University of Pennsylvania. This open source battery has been administered in >100 studies and it has been completed by hundreds of thousands of participants worldwide. The psychometric properties of the CNB tests are well established. In addition to the 8 CNB tests, Cognition includes the Digit Symbol Substitution test (DSST), a computerized adaptation of a paradigm used in the Wechsler Adult Intelligence Scale (WAIS-III) which is also part of NASA’s WinSCAT battery. Cognition also includes a validated 3-minute version of the Psychomotor Vigilance Test (i.e., PVT-B) developed by Drs. David Dinges and Mathias Basner. The PVT assesses vigilant attention and is the gold standard for measuring the adverse effects on alertness and sustained vigilance due to sleep loss and circadian misalignment. It was part of the Reaction Self-Test study that investigated sleep and alertness in 24 astronauts during 6-month missions on the International Space Station (ISS).

- Keep administration time short

Spaceflight can typically be characterized as a high-tempo, high-workload environment that leaves little room for extensive neuropsychological assessments. Furthermore, astronaut acceptability declines sharply with increasing test administration time. We therefore shortened tests in a data-driven approach. For example, the PVT was not only shortened, but both the properties of the test (e.g., inter-stimulus intervals) and of the outcome variables (e.g., lapse threshold) were modified to maintain test sensitivity despite the shorter test duration. In another example, we used Item Response Theory (IRT) to first characterize items of the Emotion Recognition test (ERT) and then reduce the number of items with minimal losses in test accuracy. After familiarization, it typically takes less than 20 minutes to administer the full Cognition battery. Astronauts on the ISS needed on average only 16 minutes to complete the full battery.

- Ensure the battery is easy-to-use and appealing to the user

Cognition allows for both facilitated administrations and self-administrations. In the former case, a researcher signs into the client software for the subject, while the subject signs into the software with unique user credentials in the latter. It is therefore important that the software is easy to use and navigate. The feedback received during early development stages of the Cognition Battery was overwhelmingly positive: users reported that the software was easy to use and acceptable.

- Allow for assessment in remote locations

Cognition is used on the ISS and in several space analog environments (e.g., Antarctic research stations) that do not have reliable Internet connections. Cognition can therefore be installed, administered, and updated in both an offline and an online mode. In the latter, Cognition automatically syncs data collected on the client laptop with a central database in a real-time fashion. The data are encrypted and the transfer uses https protocols. The data can then be viewed and downloaded from any web browser. In offline mode, the data can be downloaded locally and later uploaded to the server database. With the right user credentials, unencrypted data can also be extracted locally.

- Allow for administration in international crews

The first mission to Mars will likely involve an international crew, and many space analog environments involve non-English speaking crews. Cognition currently has German, French, Italian, and Russian translations. The software will automatically select the correct language based on the language settings of the operating system.

Cognition Battery Description

Cognition consists of 10 tests that are commonly administered in the order listed below. However, test administration is flexible (i.e. user-defined batteries can be generated that administer only a subset of the 10 tests in any desired order). Each test is briefly described here. A more detailed description of the tests and the development of Cognition can be found in Basner et al. (this manuscript won the Space Medicine Association Journal Publication Award in 2016 for the most outstanding space medicine article published in the Aerospace Medicine and Human Performance Journal).

1. Motor Praxis Test (MP)

The Motor Praxis Task (MP) is administered at the start of testing to ensure that participants have sufficient command of the computer interface, and immediately thereafter as a measure of sensorimotor speed. Participants are instructed to click on squares that appear randomly on the screen, each successive square smaller and thus more difficult to track. Performance is assessed by the speed with which participants click each square. The current implementation uses 20 consecutive stimuli. As a screener for computer skill, the MP has been included in every implementation of the CNB and validated for sensitivity to age effects, sex-differences, and associations with psychopathology.

2. Visual Object Learning (VOLT)

The Visual Object Learning Test (VOLT) assesses memory for complex figures. Participants are asked to memorize 10 sequentially displayed three-dimensional figures. Later, they are instructed to select those objects they memorized from a set of 20 such objects also sequentially presented, some of them from the learning set and some of them new. Such tasks have been shown to activate frontal and bilateral anterior medial temporal lobe regions. As the hippocampus and medial temporal lobe are also adversely affected by chronic stress, the VOLT offers a validated tool for assessment of these temporo-limbic regions in operational settings.

3. Fractal 2-Back (F2B)

The Fractal 2-Back (F2B) is a nonverbal variant of the Letter 2-Back, which is currently included in the core CNB. N-back tasks have become standard probes of the working memory system, and activate canonical working memory brain areas. The F2B consists of the sequential presentation of a set of figures (fractals), each potentially repeated multiple times. Participants have to respond when the current stimulus matches the stimulus displayed two figures ago. The current implementation uses 62 consecutive stimuli. The fractal version was chosen for Cognition because of its increased difficulty and the availability of algorithms with which new items can be generated. Traditional letter N-back tasks are restricted to 26 English letters, which limits the ability to generate novel stimuli for repeat administrations. The F2B implemented in Cognition is well-validated and shows robust activation of the dorsolateral prefrontal cortex.
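
The matching rule described above can be sketched in a few lines of Python. This is only an illustration of the 2-back logic, not the Cognition implementation: the stimuli are represented by hypothetical integer IDs, and the function name and outcome labels are assumptions introduced here.

```python
from typing import Dict, Sequence


def score_two_back(stimuli: Sequence[int], responses: Sequence[bool]) -> Dict[str, int]:
    """Classify every trial of a 2-back run.

    stimuli   -- hypothetical stimulus IDs in presentation order
    responses -- True where the participant responded to that stimulus
    """
    if len(stimuli) != len(responses):
        raise ValueError("stimuli and responses must have the same length")

    counts = {"hits": 0, "misses": 0, "false_alarms": 0, "correct_rejections": 0}
    for i, (stim, responded) in enumerate(zip(stimuli, responses)):
        # A stimulus is a target when it matches the one shown two positions earlier.
        is_target = i >= 2 and stim == stimuli[i - 2]
        if is_target:
            counts["hits" if responded else "misses"] += 1
        else:
            counts["false_alarms" if responded else "correct_rejections"] += 1
    return counts
```

Counting hits and false alarms separately, rather than a single accuracy value, makes it possible to derive measures such as response bias from the same trial-level data.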

4. Abstract Matching (AM)

The Abstract Matching (AM) test is a measure of the abstraction and flexibility components of executive function, including an ability to discern general rules from specific instances. The test paradigm presents subjects with two pairs of objects at the bottom left and right of the screen, varied on perceptual dimensions (e.g., color and shape). Subjects are presented with a target object in the upper middle of the screen that they must classify as belonging more with one of the two pairs, based on a set of implicit, abstract rules. The current implementation uses 30 consecutive stimuli. Tasks assessing abstraction and cognitive flexibility activate the prefrontal cortex.

5. Line Orientation Test (LOT)

The Line Orientation Test (LOT) is a measure of spatial orientation derived from the well-validated Judgment of Line Orientation Test, the computerized version of which was among the first to be administered with functional neuroimaging and is used in the core CNB. It has been shown to be sensitive to sex differences and age effects. The LOT presents two lines at a time: one is stationary, and the other can be rotated by clicking an arrow. Participants rotate the movable line until it is parallel to the stationary line. The current implementation has 12 consecutive line pairs that vary in length and orientation. Difficulty is determined by the length of the rotating line, its distance from the stationary line, and the number of degrees that the line rotates with each click.

6. Emotion Recognition Task (ERT)

The Emotion Recognition Task (ERT) was developed and validated with neuroimaging and is part of the Penn CNB. The ERT presents subjects with photographs of professional actors (adults of varying age and ethnicity) portraying emotional facial expressions of varying intensities (biased towards lower intensities and balanced across the different versions of the test). Subjects are given a set of emotion labels (“happy”; “sad”; “angry”; “fearful”; and “no emotion”) and must select the label that correctly describes the expressed emotion. The current implementation uses 20 consecutive stimuli, with 4 stimuli each representing one of the above 5 categories. ERT performance has been associated with amygdala activity in a variety of experimental contexts and has shown sensitivity to such diverse phenomena as menstrual cycle phase, mood and anxiety disorders, and schizophrenia. Additionally, sensitivity to hippocampal activation has been demonstrated when positive and negative emotional valence performance is analyzed alone (on a specific task only probing valence). The test has also shown sensitivity to sex differences and normal aging.

7. Matrix Reasoning Test (MRT)

The Matrix Reasoning Test (MRT) is a measure of abstract reasoning and consists of increasingly difficult pattern matching tasks. It is analogous to Raven's Progressive Matrices and recruits prefrontal, parietal, and temporal cortices. It loads highly on the “g” factor. The test consists of a series of patterns overlaid on a grid. One element from the grid is missing and the participant must select the element that fits the pattern from a set of alternative options. The current implementation uses 12 consecutive stimuli. The MRT is included in the Penn CNB, and has been validated along with all other tests in major protocols using the CNB.

8. Digit Symbol Substitution Task (DSST)

The Digit-Symbol Substitution Task (DSST) is a computerized adaptation of a paradigm used in the Wechsler Adult Intelligence Scale (WAIS-III) to measure processing speed. The DSST requires the participant to refer to a displayed legend relating each of the digits one through nine to specific symbols. One of the nine symbols appears on the screen and the participant must select the corresponding number as quickly as possible. The test duration is fixed at 90 s, and the legend key is randomly re-assigned with each administration. Fronto-parietal activation associated with DSST performance has been interpreted as reflecting both onboard processing speed in working memory and low-level visual search.
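
As a rough sketch of how a legend might be re-assigned at random for each administration, consider the following Python fragment. The placeholder symbols, the function names, and the use of a seeded random generator are assumptions made for illustration; the actual Cognition symbols and randomization procedure are not described here.

```python
import random
from typing import Dict, Optional, Sequence


def make_legend(symbols: Sequence[str],
                rng: Optional[random.Random] = None) -> Dict[int, str]:
    """Randomly pair the digits 1-9 with nine distinct symbols."""
    if len(symbols) != 9 or len(set(symbols)) != 9:
        raise ValueError("exactly nine distinct symbols are required")
    if rng is None:
        rng = random.Random()
    shuffled = list(symbols)
    rng.shuffle(shuffled)  # a new digit-symbol pairing for this administration
    return dict(zip(range(1, 10), shuffled))


def is_correct(legend: Dict[int, str], shown_symbol: str, pressed_digit: int) -> bool:
    """A response is correct when the pressed digit maps to the displayed symbol."""
    return legend.get(pressed_digit) == shown_symbol
```

For example, `make_legend("ABCDEFGHI", random.Random(1))` yields one reproducible pairing; omitting the generator produces a fresh pairing on every call.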

9. Balloon Analog Risk Test (BART)

The Balloon Analog Risk Test (BART) is a validated assessment of risk taking behavior and robustly activates striatal mesolimbic-frontal regions not covered by existing batteries. The BART requires participants to either inflate an animated balloon or collect a reward. Participants are rewarded in proportion to the final size of each balloon, but a balloon will pop after a hidden number of pumps, which changes from trial to trial. The current implementation uses 30 consecutive stimuli. The average tendency of balloons to pop is systematically varied between test administrations. This feature requires subjects to adjust the level of risk they take based on the behavior of the balloons, and prevents subjects from identifying a strategy during the first administrations of the battery and carrying it through to later administrations.
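
The trade-off between pumping and collecting can be illustrated with a small simulation. This is a sketch under stated assumptions, not the Cognition implementation: pop points are drawn uniformly between 1 and a hypothetical `max_pop_point`, a burst balloon is assumed to earn nothing, and the simulated participant uses the same fixed number of pumps on every balloon.

```python
import random


def run_balloon(planned_pumps: int, pop_point: int, reward_per_pump: float = 1.0) -> float:
    """Return the reward for one balloon.

    The balloon bursts on pump number `pop_point`. A burst balloon is assumed
    to earn nothing; otherwise the reward grows with the number of pumps.
    """
    if planned_pumps >= pop_point:
        return 0.0
    return planned_pumps * reward_per_pump


def simulate_administration(planned_pumps: int, max_pop_point: int,
                            n_balloons: int = 30, seed: int = 0) -> float:
    """Total reward over one administration with hidden, per-trial pop points."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_balloons):
        pop_point = rng.randint(1, max_pop_point)  # hidden from the participant
        total += run_balloon(planned_pumps, pop_point)
    return total
```

Changing `max_pop_point` between simulated administrations mimics a shift in the average tendency of balloons to pop: a pumping strategy that pays off under one setting can perform poorly under another.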

10. Psychomotor Vigilance Test (PVT)

The Psychomotor Vigilance Test (PVT) records reaction times (RT) to visual stimuli that occur at random inter-stimulus intervals. Subjects are instructed to monitor a box on the screen and to hit the space bar as soon as a millisecond counter appears in the box and starts incrementing. The reaction time is then displayed for one second. Subjects are instructed to respond as fast as possible while avoiding presses of the space bar when no stimulus is present (i.e., false starts or errors of commission). The PVT is a scientifically validated, sensitive measure of vigilant attention and of the effects of acute and chronic sleep deprivation and circadian phase on vigilance. The PVT has negligible aptitude and learning effects. Differential activation during PVT performance has been shown across sleep-deprivation conditions, displaying increased activation in right fronto-parietal sustained attention regions when performing optimally, and increased default-mode activation after sleep deprivation, considered a compensatory mechanism. The standard Cognition Test Battery uses a validated 3-minute version of the PVT (i.e., PVT-B). The standard 10-minute version of the PVT is also available in the Cognition Test Battery.
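
A few common summary statistics can be derived from a list of reaction times, as in the Python sketch below. The function name and the returned field names are hypothetical, and the default lapse threshold of 500 ms is an assumption used purely for illustration; the threshold is left as a parameter because it differs between PVT versions.

```python
from statistics import mean
from typing import Dict, Sequence


def summarize_pvt(reaction_times_ms: Sequence[float],
                  false_starts: int,
                  lapse_threshold_ms: float = 500.0) -> Dict[str, float]:
    """Summarize one PVT administration.

    reaction_times_ms  -- reaction times of valid responses, in milliseconds
    false_starts       -- number of key presses made without a stimulus
    lapse_threshold_ms -- responses at or above this value count as lapses
                          (illustrative default, not the Cognition setting)
    """
    if not reaction_times_ms:
        raise ValueError("at least one valid reaction time is required")
    if any(rt <= 0 for rt in reaction_times_ms):
        raise ValueError("reaction times must be positive")

    lapses = sum(1 for rt in reaction_times_ms if rt >= lapse_threshold_ms)
    return {
        "mean_rt_ms": mean(reaction_times_ms),
        # Response speed (1/RT) expressed in responses per second.
        "mean_speed_per_s": mean([1000.0 / rt for rt in reaction_times_ms]),
        "lapses": lapses,
        "false_starts": false_starts,
    }
```

Reporting lapses (errors of omission) alongside false starts (errors of commission) captures both ways in which vigilant attention can fail.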

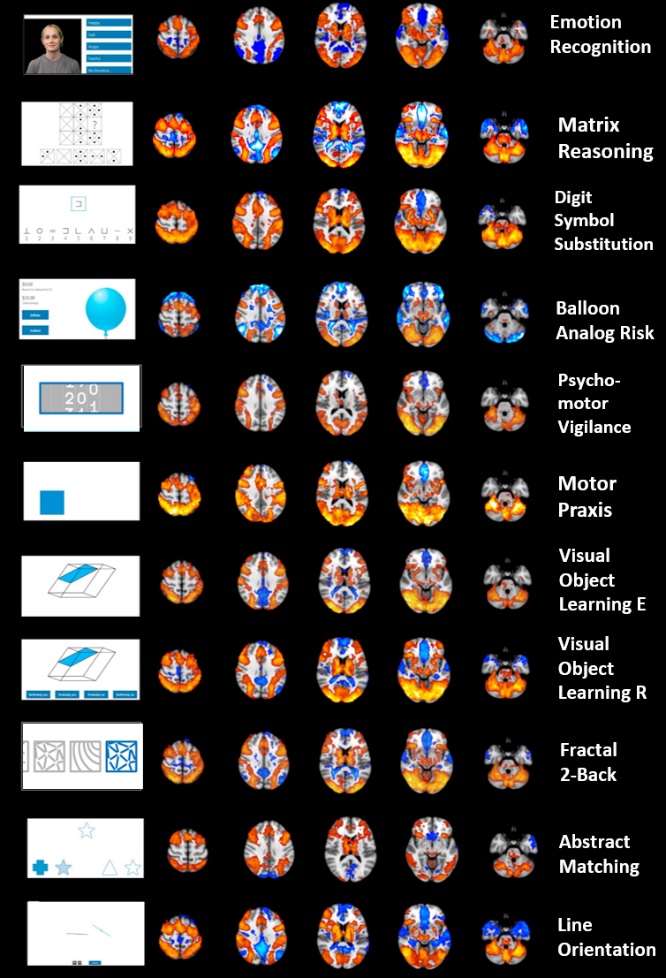

Cognition Links to Cerebral Networks Have Been Established

We developed a version of Cognition that can be administered in magnetic resonance imaging (MRI) scanners for functional neuroimaging. We recently completed a study on N=50 high-performing individuals with STEM backgrounds. The results identified cerebral networks primarily recruited by each of the 10 Cognition tests (see figure below; publication in preparation).

Links Between Cognition and Operational Performance

We completed a study investigating which tests from the Cognition battery best predicted performance on a complex six degrees-of-freedom (6df) ISS docking task. Three Cognition outcomes were able to predict 30% of the variance in docking performance (cross-validated and adjusted for age and sex). These outcomes were speed on the DSST, accuracy on the AM, and response bias on the F2B (publication in preparation).

Administering Cognition

Platforms and Calibration for Timing Accuracy

NASA currently uses only the Microsoft Windows version of Cognition, which is administered on laptop computers. To ensure high standards of accuracy for Cognition tests, laptops used for Cognition administration should have a touchpad and a separate physical mouse button. Prior to deployment, laptops are calibrated for timing accuracy with a robotic calibrator by Pulsar Informatics Inc. In the calibration process, the average and standard deviation of response latencies are determined for both the spacebar and the left mouse button. The average response latency is then automatically subtracted from a subject’s response times. Response latencies have already been determined for a number of laptop models. As latencies were found to be stable within a specific laptop model, existing calibration values can be adopted for the same model type (i.e., a physical calibration is not necessary). There is also an Apple iPad version of Cognition developed by Pulsar Informatics Inc. Performance on the laptop and iPad versions was compared in a study of 96 high-performing individuals and found to differ across the two platforms.
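
The latency correction amounts to a simple subtraction, sketched below in Python. The function names and the example values are hypothetical; the sketch only shows the arithmetic of characterizing an input device and correcting raw response times, not the calibration procedure itself.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def latency_profile(measured_latencies_ms: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard deviation of the latencies measured for one input device.

    At least two measurements are needed to compute a standard deviation.
    """
    return mean(measured_latencies_ms), stdev(measured_latencies_ms)


def correct_response_times(raw_rts_ms: Sequence[float],
                           mean_latency_ms: float) -> List[float]:
    """Subtract the device's average latency from each raw response time."""
    return [rt - mean_latency_ms for rt in raw_rts_ms]
```

With hypothetical spacebar measurements of 10, 12 and 14 ms, the profile is a mean of 12 ms and a standard deviation of 2 ms, so a raw response time of 250 ms would be reported as 238 ms. Because the spacebar and the mouse button are calibrated separately, each would use its own profile.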

Survey

Cognition typically administers a survey before testing starts (see below). We currently have a brief survey that asks about sleep times, stimulant use, and alertness levels, and a longer survey that includes 11-point Likert-type scales on, among others, health, stress, workload, happiness, and fatigue.

Practice Versions

Eight out of the 10 Cognition tests have a brief practice version. These practice versions are shorter than the actual test and may show feedback on individual stimuli that the actual test does not show. We typically require subjects to perform the practice version during the very first Cognition administration (i.e., familiarization). After the first administration, the practice versions are optional.

Instructions

Each test displays standardized instructions prior to test administration. The user is then asked to start the test with the input method that applies to the test (i.e., space bar, mouse button, or number key).

Feedback

After each test, Cognition displays a feedback score that ranges between 0 (worst possible performance) and 1000 (best possible performance). Cognition also provides feedback on all prior administrations by the same subject. Feedback scores were improved for Version 3 of Cognition. They can now be interpreted as percentile ranks relative to a norm population. For example, a score of 585 translates to a percentile rank of 58.5, meaning that 58.5% of the norm population performed worse on the given test. The feedback scores depend on both accuracy and speed. With few exceptions, accuracy is weighted twice as high as speed. Because the feedback scores depend on a few key statistics, it is technically possible to change the underlying norms for the feedback scores (however, that would require additional software development). It is currently not possible to turn off the feedback after each test. At the end of a battery, Cognition shows a summary of the feedback scores of each individual test, and an overall test score across tests (ranging from 0 to 10,000 for the full Cognition battery).
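
The percentile interpretation of the feedback score can be illustrated with a short Python sketch. The function names are hypothetical, and the way accuracy and speed are combined (a weighted average with weights 2 and 1 on values already scaled to a common range) is an assumption; the text above states only the relative weighting, not the formula used by Cognition.

```python
from typing import Sequence


def combine_accuracy_and_speed(accuracy: float, speed: float) -> float:
    """Weighted average with accuracy counted twice as heavily as speed.

    Both inputs are assumed to be on the same scale (e.g., 0 to 1).
    """
    return (2.0 * accuracy + speed) / 3.0


def percentile_feedback(value: float, norm_values: Sequence[float]) -> float:
    """Score from 0 to 1000: ten times the percentage of the norm sample
    that performed worse than `value` (higher values mean better performance)."""
    if not norm_values:
        raise ValueError("the norm sample must not be empty")
    worse = sum(1 for v in norm_values if v < value)
    return 1000.0 * worse / len(norm_values)
```

In a norm sample of 1,000 distinct values, a performance that beats exactly 585 of them yields a score of 585, matching the example above. Summing ten such per-test scores gives a range of 0 to 10,000, which is consistent with the overall score range described for the full battery.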

Comments

Cognition users can leave comments after each test before proceeding to the next test. They can, for example, indicate any distractions experienced while performing the test. The comments are archived with the test results.

Aborted Tests

If a user stops Cognition before all 10 tests have been administered, Cognition will automatically continue with the next test in the sequence the next time Cognition is started. Tests will abort automatically if another program claims the focus, as Cognition may not be able to capture user input in these situations. It is therefore important to disable notifications as much as possible on laptops that run Cognition. The tests can also be aborted by a keyboard shortcut.

Cognition Web Interface

Cognition data are stored on a central server. If Cognition is operated in online mode, the data will be automatically transferred to the database (encrypted and via https protocol). They can be accessed through a web interface that is site specific (i.e., each account holder can only see the data collected under their account ID). The web interface is also used to generate studies and associate studies with plans that contain specific sequences of batteries. Subject IDs can also be generated and modified through the web interface. Administrative personnel with different user rights (e.g., administrators, data reviewers, test facilitators) can be added or deleted. Data can be directly viewed and annotated on the server. The data can be sorted, filtered and downloaded for analyses. A snapshot of the database can be downloaded and then used to install or update Cognition on laptops that run in offline mode.

Data Export

Data exports contain two different file types: (1) “Metrics” files that have key summary statistics (one row for each test administration) and (2) “TimeSeries” files that list each subject response and other relevant events (e.g., stimulus displayed) with a millisecond exact time stamp corrected for system response latency (see above). Researchers can use “TimeSeries” files to generate any metric they like. Both “Metrics” and “TimeSeries” files contain meta data that include software version, laptop ID, subject ID, study name, plan name, battery name, comments left by the subject, battery and test start and end times.

Adjusting for Practice and Stimulus Set Effects

We recently completed a study that made it possible to disentangle and adjust for practice effects and stimulus set difficulty effects for different administration schedules (multiple times per day, intervals of 1-5 days, intervals of ≥10 days). The correction factors established in this study are a unique feature of the Cognition battery that can help avoid masking via practice effects, address noise generated by differences in stimulus set difficulty, and facilitate interpretation of results from studies with inadequate controls or short practice schedules. The results of this study have been published (Basner et al., 2020, Journal of Clinical and Experimental Neuropsychology; see Cognition Literature).
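The general idea of such an adjustment can be sketched as follows. This is only an illustration that assumes additive correction factors indexed by administration number and stimulus set; the numeric values, the dictionary names and the `adjust_score` function are hypothetical and are not the published correction factors.

```python
# Hypothetical additive correction factors (made-up numbers, not the
# published values). Keys are administration number and stimulus set label.
PRACTICE_EFFECT = {1: 0.0, 2: 1.5, 3: 2.5, 4: 3.0}
STIMULUS_SET_EFFECT = {"A": 0.0, "B": -0.5, "C": 1.0}


def adjust_score(raw, administration, stimulus_set):
    """Remove the assumed practice and stimulus set effects from a raw score."""
    # Assume practice effects plateau after the last tabulated administration.
    last = max(PRACTICE_EFFECT)
    practice = PRACTICE_EFFECT[min(administration, last)]
    return raw - practice - STIMULUS_SET_EFFECT[stimulus_set]


# Third administration with stimulus set "B": 82.0 - 2.5 - (-0.5)
adjusted = adjust_score(82.0, 3, "B")
print(adjusted)
```

In practice, the appropriate factors would depend on the test, the outcome variable and the administration interval, as described in the publication.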

Cognition in Spaceflight

The Cognition battery is part of NASA’s Behavioral Health and Performance Standard Measures, a set of measures that is routinely performed by all astronauts on ISS missions and by research subjects in space analog environments. In 2018, Dr. Basner was awarded the International Space Station Innovation Award for Cognition by the American Astronautical Society. In 2016, the original paper describing Cognition received the Journal Publication Award for the Most Outstanding Space Medicine Article published in the Aerospace Medicine and Human Performance Journal by the Space Medicine Association.

Availability of the Cognition Software

The Cognition software (both client and server software) is freely available through NASA for federally funded research. NASA requires researchers to have both IRB oversight and the expertise to administer and interpret cognitive tests.

Researchers may also collaborate with the Cognition R&D team at the University of Pennsylvania to gain access to the server installation of Cognition at the University of Pennsylvania and to the Cognition client version. Questions regarding collaborations should be sent to cognition@pennmedicine.upenn.edu.

The iPad version of Cognition is commercially available through Pulsar Informatics Inc.

Cognition Literature

Basner, M., Savitt, A., Moore, T.M., Port, A.M., McGuire, S., Ecker, A.J., Nasrini, J., Mollicone, D.J., Mott, C.M., McCann, C., Dinges, D.F., Gur, R.C.: Development and validation of the Cognition test battery for spaceflight. Aerospace Medicine and Human Performance, 86(11), 942-952, 2015.

Moore, T.M., Basner, M., Nasrini, J., Hermosillo, E., Kabadi, S., Roalf, D.R., McGuire, S., Ecker, A.J., Ruparel, K., Port, A.M., Jackson, C.T., Dinges, D.F., Gur, R.C.: Validation of the Cognition test battery for spaceflight in a sample of highly educated adults. Aerospace Medicine and Human Performance, 88(10), 937-946, 2017.

Basner, M., Hermosillo, E., Nasrini, J., McGuire, S., Saxena, S., Moore, T.M., Gur, R.C., Dinges, D.F.: Repeated administration effects on Psychomotor Vigilance Test (PVT) performance. Sleep, 41(1), zsx187, 1-6, 2018.

Basner, M., Nasrini, J., Hermosillo, E., McGuire, S., Dinges, D.F., Moore, T.M., Gur, R.C., Rittweger, J., Mulder, E., Wittkowski, M., Donoviel, D., Stevens, B., Bershad, E.M., and the SPACECOT investigator group: Effects of -12° head-down tilt with and without elevated levels of CO2 on cognitive performance: the SPACECOT study. Journal of Applied Physiology, 124(3), 750-760, 2018.

Garrett-Bakelman, F.E., Darshi, M., Green, S.J., Gur, R.C., Lin, L., Macias, B.R., McKenna, M.J., Meydan, C., Mishra, T., Nasrini, J., et al.: The NASA Twins Study: A multi-dimensional analysis of a year-long human spaceflight. Science, 364(6436), 2019.

Scully, R., Basner, M., Nasrini, J., Lam, C.-W., Hermosillo, E., Gur, R.C., Moore, T.M., Alexander, D., Satish, U., Ryder, V.: Effects of acute exposures to carbon dioxide on decision making and cognition in astronaut-like subjects. npj Microgravity, 5(1), 1-15, 2019.

Lee, G., Moore, T.M., Basner, M., Nasrini, J., Roalf, D.R., Ruparel, K., Port, A.M., Dinges, D.F., Gur, R.C.: Age, sex, and repeated measures effects on NASA’s Cognition test battery in STEM educated adults. Aerospace Medicine and Human Performance, 91(1), 18-25, 2020.

Nasrini, J., Hermosillo, E., Dinges, D.F., Moore, T.M., Gur, R.C., Basner, M.: Cognitive performance during confinement and sleep restriction in NASA’s Human Exploration Research Analog (HERA). Frontiers in Physiology, 11(394), 1-13, 2020.

Dayal, D., Jesudasen, S., Scott, R., Stevens, B., Hazel, R., Nasrini, J., Donoviel, D., Basner, M.: Effects of short-term -12° head-down tilt on cognitive performance. Acta Astronautica, 175(2), 582-590, 2020.

Basner, M., Hermosillo, E., Nasrini, J., Dinges, D.F., Moore, T.M., Gur, R.C.: Cognition test battery: adjusting for practice and stimulus set effects for varying administration intervals in high performing individuals. Journal of Clinical and Experimental Neuropsychology, 42(5), 516-529, 2020.
